| [Oshita Lab.][Research Theme] | [Japanese] |


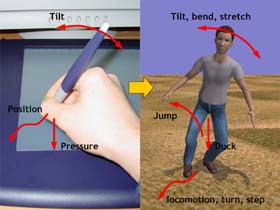

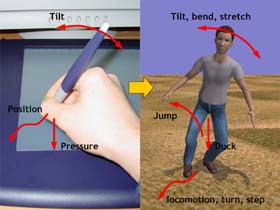

| Pen-based motion control interface. |

This paper presents an intuitive pen-based interface for interactively controlling a virtual human figure. Recent commercial pen devices can detect not only the position of the pen but also its pressure and tilt. We use this information to make a human figure perform various types of motion in response to the user's pen movements. The figure walks, runs, turns, and steps according to the trajectory and speed of the pen. The figure also bends, stretches, and tilts in response to the tilt of the pen. Moreover, it ducks and jumps in response to the pen pressure. Using this interface, the user controls a virtual human figure intuitively, as if he or she were holding a virtual puppet and playing with it.
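As a rough illustration of how the pen trajectory could drive locomotion, the sketch below derives a speed and heading from two consecutive pen samples and picks a gait from the speed. The `PenSample` type, the `locomotion_command` function, the coordinate units, and the `run_threshold` value are all hypothetical choices made for this example; they are not taken from the system described here.

```python
import math
from dataclasses import dataclass


@dataclass
class PenSample:
    t: float  # timestamp in seconds
    x: float  # pen position on the tablet (assumed already scaled to world units)
    y: float


def locomotion_command(prev: PenSample, curr: PenSample, run_threshold: float = 2.0):
    """Return (gait, speed, heading) from two consecutive pen samples.

    run_threshold is an assumed speed (units per second) above which the
    figure runs instead of walking.
    """
    dt = curr.t - prev.t
    if dt <= 0:
        raise ValueError("samples must be strictly increasing in time")
    dx, dy = curr.x - prev.x, curr.y - prev.y
    speed = math.hypot(dx, dy) / dt
    heading = math.atan2(dy, dx)  # radians, measured from the +x axis
    if speed == 0:
        gait = "stand"
    elif speed < run_threshold:
        gait = "walk"
    else:
        gait = "run"
    return gait, speed, heading
```

A real interface would likely smooth the samples over a short window before classifying the gait, since raw tablet input is noisy.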

In addition to the interface design, this paper describes a motion generation engine that produces various motions based on the parameters given by the pen interface. We take a motion blending approach and construct a motion blending module for each type of motion from a small set of motion capture data. Finally, we discuss the effectiveness and limitations of the interface based on some preliminary experiments.
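The general idea of parameter-driven motion blending can be sketched as follows. This is a simplified illustration, not the engine described above: it assumes each example motion is reduced to a single pose given as a list of joint angles, that the examples are tagged with one scalar parameter (for instance locomotion speed), and that weights are obtained by linear interpolation between the two nearest examples. The names `blend_weights` and `blend_pose` are hypothetical.

```python
def blend_weights(param, example_params):
    """Linear interpolation weights for a scalar parameter.

    example_params must be strictly ascending; values outside the range are
    clamped to the nearest example.
    """
    n = len(example_params)
    weights = [0.0] * n
    if param <= example_params[0]:
        weights[0] = 1.0
        return weights
    if param >= example_params[-1]:
        weights[-1] = 1.0
        return weights
    for i in range(n - 1):
        lo, hi = example_params[i], example_params[i + 1]
        if lo <= param <= hi:
            u = (param - lo) / (hi - lo)
            weights[i] = 1.0 - u
            weights[i + 1] = u
            break
    return weights


def blend_pose(poses, weights):
    """Weighted sum of example poses (each pose is a list of joint angles)."""
    n_joints = len(poses[0])
    return [sum(w * pose[j] for w, pose in zip(weights, poses))
            for j in range(n_joints)]


# Three example poses captured at speeds 1.0, 2.0 and 4.0 (made-up numbers).
weights = blend_weights(1.5, [1.0, 2.0, 4.0])
pose = blend_pose([[0.0, 10.0], [10.0, 20.0], [20.0, 40.0]], weights)
```

Averaging joint angles directly is only reasonable for small differences between poses; practical blending typically interpolates rotations as quaternions and time-aligns the example clips first.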


| Examples of the pen-based motion control interface. In each image, the left view shows the controlled figure and the right view visualizes the user's pen movements. The yellow stick shows the pen tilt. The red arrow under the pen shows the orientation and velocity of the pen movement. The blue arrow shows the figure's orientation. (a) The figure walks and (b) runs in response to the pen movements. (c) The figure also tilts, bends, and stretches in response to the pen tilt. (d) If the pen is pressed onto the tablet for a while, the figure ducks in response to the pen pressure. (e) In addition, if the pen is pressed and released quickly, the figure jumps. (f) The jump height and distance are determined by the pen pressure and the locomotion velocity at the moment the pen is released. The user of our system can make the figure perform various motions through an intuitive interface. |
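The duck and jump behaviour in (d) to (f) could be approximated by classifying each pen press by its duration. The sketch below is only an illustration under stated assumptions: pressure is normalized to the range 0 to 1, a press shorter than `quick_press` seconds counts as "quick", jump height scales linearly with peak pressure, and jump distance is the release speed multiplied by a fixed `flight_time`. The function name and all constants are hypothetical.

```python
def classify_press(duration, peak_pressure, speed_at_release,
                   quick_press=0.3, max_jump_height=1.0, flight_time=0.5):
    """Map one pen press to a duck or jump command.

    duration          seconds the pen was pressed
    peak_pressure     normalized pressure in [0, 1] (assumed normalization)
    speed_at_release  locomotion speed when the pen was released
    """
    if not 0.0 <= peak_pressure <= 1.0:
        raise ValueError("peak_pressure must be normalized to [0, 1]")
    if duration < quick_press:
        # Quick press and release: jump, scaled by pressure and speed.
        return {
            "action": "jump",
            "height": peak_pressure * max_jump_height,
            "distance": speed_at_release * flight_time,
        }
    # Sustained press: duck, deeper with more pressure.
    return {"action": "duck", "depth": peak_pressure}
```

For example, `classify_press(0.1, 0.5, 2.0)` yields a jump command, while `classify_press(1.0, 0.8, 0.0)` yields a duck command.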